Publications

For a full list, see Google Scholar.

PhD Thesis: Deep Exploration via Randomized Value Functions

Statistically efficient RL requires "deep exploration".

Previous approaches to deep exploration have not been computationally tractable beyond small-scale problems.

This dissertation presents an alternative approach through the use of randomized value functions.

Ian Osband

PhD Thesis 2016

[Stanford link] [Mirror] [YouTube]

Deep Exploration via Randomized Value Functions

Journal paper that combines the best parts of my PhD thesis with lots of other work from our group over the past few years.

Ian Osband, Benjamin Van Roy, Daniel Russo, Zheng Wen

Journal of Machine Learning Research 2018

[Paper]

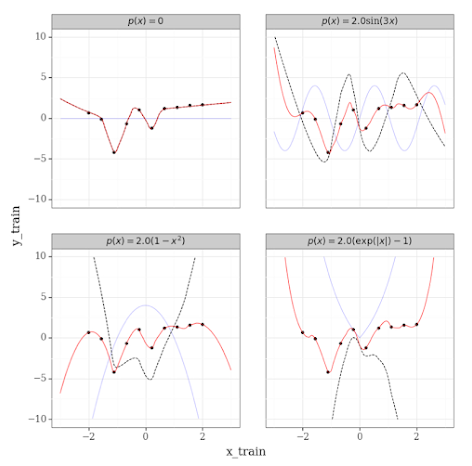

Randomized Prior Functions for Deep Reinforcement Learning

Bootstrapped ensembles for uncertainty estimation have an obvious flaw in "sparse reward" environments: if every observed reward is zero, why wouldn't every member just predict zero?

We provide a simple solution: add a random untrainable "prior function" to each ensemble member.

This matches Bayesian inference for linear systems and gives good empirical results, scaling all the way to Montezuma's Revenge!

Ian Osband, John Aslanides, Albin Cassirer

NeurIPS 2018

[Website] [Paper] [Code] [Poster] [Spotlight]
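
To make the "prior function" idea concrete, here is a minimal plain-Python sketch, assuming 1-D linear models with features (1, x), ridge regression, one bootstrap resample per member and a Gaussian random prior. The names (`PriorMember`, `train_ensemble`, `beta`, `lam`) are hypothetical choices for illustration, not the paper's code, which uses neural networks.

```python
import random


def fit_ridge_2d(xs, targets, lam):
    """Closed-form ridge regression for features phi(x) = (1, x)."""
    # A = Phi^T Phi + lam * I (2x2, symmetric) and b = Phi^T t.
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    a11, a12, a22 = n + lam, sx, sxx + lam
    b1 = sum(targets)
    b2 = sum(x * t for x, t in zip(xs, targets))
    det = a11 * a22 - a12 * a12  # > 0 whenever lam > 0
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


class PriorMember:
    """One ensemble member: trainable f(x) plus a fixed random prior beta * p(x)."""

    def __init__(self, rng, beta):
        self.prior = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))  # never trained
        self.beta = beta
        self.w = (0.0, 0.0)  # trainable part f(x) = w0 + w1 * x

    def prior_value(self, x):
        return self.prior[0] + self.prior[1] * x

    def fit(self, xs, ys, lam):
        # Only f is trained, so that f(x) + beta * p(x) fits y.
        targets = [y - self.beta * self.prior_value(x) for x, y in zip(xs, ys)]
        self.w = fit_ridge_2d(xs, targets, lam)

    def predict(self, x):
        return self.w[0] + self.w[1] * x + self.beta * self.prior_value(x)


def train_ensemble(xs, ys, k=10, beta=1.0, lam=1.0, seed=0):
    rng = random.Random(seed)
    members = []
    for _ in range(k):
        member = PriorMember(rng, beta)
        idx = [rng.randrange(len(xs)) for _ in xs]  # bootstrap resample
        member.fit([xs[i] for i in idx], [ys[i] for i in idx], lam)
        members.append(member)
    return members


xs = [i / 10 for i in range(10)]
ys = [0.0] * len(xs)  # "sparse reward": every observed target is zero
no_prior = [m.predict(5.0) for m in train_ensemble(xs, ys, beta=0.0)]
with_prior = [m.predict(5.0) for m in train_ensemble(xs, ys, beta=1.0)]
print(max(no_prior) - min(no_prior))  # 0.0: every member predicts exactly zero
print(max(with_prior) - min(with_prior))  # > 0: members disagree far from the data
```

With all-zero targets and `beta=0`, every member fits exactly zero, so the ensemble shows no uncertainty anywhere. With the prior switched on, members stay close together near the data but spread out at `x = 5`.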

Scalable Coordinated Exploration in Concurrent Reinforcement Learning

Imagine you have a team of agents working in parallel: how can the group as a whole explore efficiently?

It turns out that "randomized value functions" scale to this setting in a natural way... if you do it the right way!

Maria Dimakopoulou, Ian Osband, Benjamin Van Roy

NeurIPS 2018

[Paper]

A Tutorial on Thompson Sampling

An introduction to, and overview of, the Thompson sampling principle: what does it mean, what are its benefits, and how can it be applied? A great overview of what this algorithm really does, without getting too drawn into "regret bounds" and analysis... lots of good examples!

Daniel Russo, Benjamin Van Roy, Abbas Kazerouni, Ian Osband, Zheng Wen

Foundations and Trends in Machine Learning 2018

[Paper] [Code]
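
For the simplest case covered in the tutorial, a Bernoulli bandit with independent Beta priors, Thompson sampling fits in a few lines: sample a model from the posterior, act greedily with respect to that sample, update. This is a minimal stdlib-only sketch; the function name, the uniform Beta(1, 1) prior and the simulated arm probabilities are assumptions for illustration, not code from the tutorial's repository.

```python
import random


def thompson_bernoulli(true_probs, steps, seed=0):
    """Beta-Bernoulli Thompson sampling on a simulated multi-armed bandit."""
    rng = random.Random(seed)
    k = len(true_probs)
    alpha = [1.0] * k  # Beta(1, 1) = uniform prior on each arm's success probability
    beta = [1.0] * k
    pulls = [0] * k
    for _ in range(steps):
        # 1. Sample one plausible success probability per arm from its posterior.
        samples = [rng.betavariate(alpha[i], beta[i]) for i in range(k)]
        # 2. Act greedily with respect to the sampled model.
        arm = max(range(k), key=lambda i: samples[i])
        # 3. Observe a simulated 0/1 reward and update that arm's Beta posterior.
        reward = 1 if rng.random() < true_probs[arm] else 0
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1
    return pulls


pulls = thompson_bernoulli([0.2, 0.5, 0.8], steps=2000)
print(pulls)  # most pulls should go to the last (best) arm
```

Because the Beta counts are the exact posterior for this conjugate model, "sample, act greedily, update" is Thompson sampling with no approximation.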

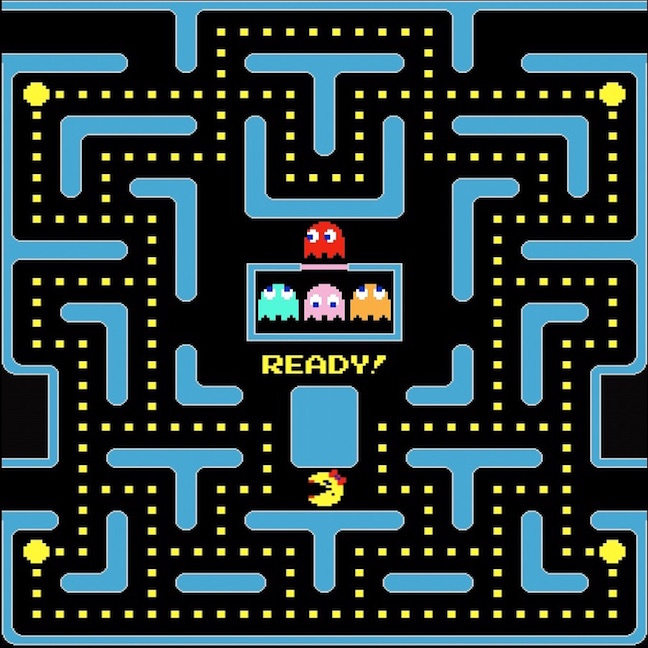

Deep Q-learning from Demonstrations

We very rarely want to deploy an RL system without any prior knowledge of how to behave.

We look at a simple and scalable approach to incorporating expert knowledge into deep RL.

This leads to state-of-the-art scores on many Atari 2600 games.

Todd Hester et al. (I'm one of the "al.")

AAAI 2018

[arXiv]

Posterior Sampling for Reinforcement Learning without Episodes

Some of the previously published results for posterior sampling without episodic reset are incorrect.

This note clarifies some of the issues in this space and presents some conjectures towards future solutions.

Ian Osband, Benjamin Van Roy

arXiv technical pre-print 2016

[Paper]

On Lower Bounds for Regret in Reinforcement Learning

A previously published proof of lower bounds on the regret achievable by any reinforcement learning algorithm is incorrect.

This note clarifies some of the issues in this space and presents some further conjectures on what might be true.

Ian Osband, Benjamin Van Roy

arXiv technical pre-print 2016

[Paper]

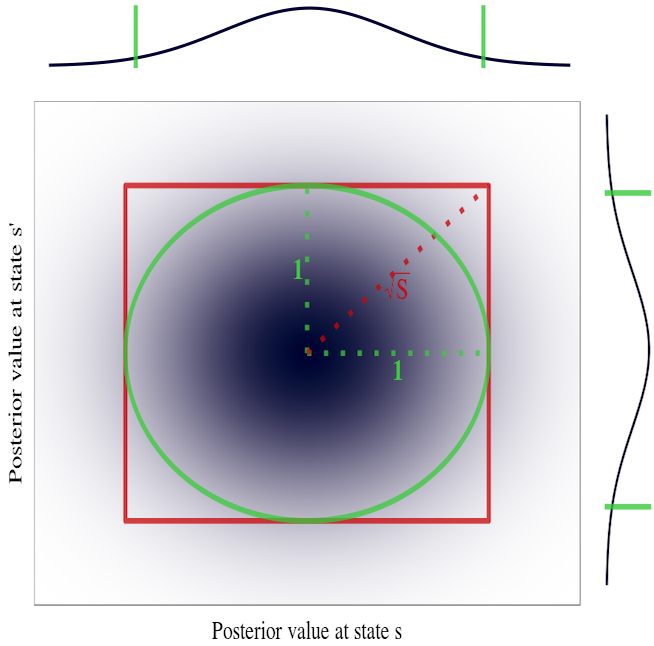

Why is Posterior Sampling Better than Optimism for Reinforcement Learning?

Computational results demonstrate that PSRL dramatically outperforms UCRL2. We provide insight into the extent of this performance boost and the phenomenon that drives it.

Ian Osband, Benjamin Van Roy

EWRL 2016 (full oral)

[Paper] [Code]

Deep Exploration via Bootstrapped DQN

Brings deep exploration to deep reinforcement learning. Takes the insight from efficient exploration via randomized value functions and attains state-of-the-art results on Atari. Includes some sweet vids.

Ian Osband, Charles Blundell, Alex Pritzel, Benjamin Van Roy

NeurIPS 2016

[Paper] [Videos]

Bootstrapped Thompson Sampling and Deep Exploration

A principled approach to efficient exploration with generalization that can be implemented for deep learning models at scale. Uses an augmented bootstrap to approximate the posterior distribution.

Ian Osband, Benjamin Van Roy

arXiv technical pre-print 2015

[Paper]
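
A minimal sketch of the augmented-bootstrap idea on a Bernoulli bandit, assuming the artificial prior data is one pseudo-success and one pseudo-failure per arm (the paper's exact augmentation scheme may differ). The bootstrap resample stands in for a posterior sample, so nothing here needs a conjugate prior; `bootstrap_mean` and `bootstrapped_ts` are hypothetical names.

```python
import random


def bootstrap_mean(rewards, rng, prior_successes=1, prior_failures=1):
    """Mean of one bootstrap resample of real rewards plus artificial prior data."""
    # The artificial observations keep rarely-tried arms uncertain (the "augmented" part).
    data = list(rewards) + [1] * prior_successes + [0] * prior_failures
    resample = rng.choices(data, k=len(data))  # sample with replacement
    return sum(resample) / len(resample)


def bootstrapped_ts(true_probs, steps, seed=0):
    rng = random.Random(seed)
    history = [[] for _ in true_probs]  # observed 0/1 rewards per arm
    for _ in range(steps):
        # One bootstrap estimate per arm plays the role of a posterior sample.
        estimates = [bootstrap_mean(h, rng) for h in history]
        arm = max(range(len(true_probs)), key=lambda i: estimates[i])
        reward = 1 if rng.random() < true_probs[arm] else 0
        history[arm].append(reward)
    return [len(h) for h in history]


pulls = bootstrapped_ts([0.1, 0.3, 0.9], steps=500)
print(pulls)  # most pulls should go to the last (best) arm
```

Without the artificial data, an arm whose only observation is a 0 would resample to 0 every time and could end up starved; the augmentation is what keeps exploration alive.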

Generalization and Exploration via Randomized Value Functions

You can combine efficient exploration and generalization, all without a model-based planning step. Some cool empirical results and also some theory. My favorite paper.

Ian Osband, Zheng Wen, Benjamin Van Roy

ICML 2016

[Paper] [Talk] [Poster] [Slides]
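
A minimal tabular sketch of randomized least-squares value iteration (RLSVI), the algorithm from this paper, assuming one-hot features (so the Bayesian linear regression decouples into one Gaussian per timestep, state and action), a Gaussian prior with variance `prior_var`, noise variance `noise_var` and a toy 5-state chain. The environment and all names and constants are hypothetical; the paper's point is that the same recipe works with general linear features.

```python
import random
from collections import defaultdict


def chain_step(s, a, n_states):
    """Toy chain: action 1 moves right, action 0 moves left; reward 1 only for pushing right at the end."""
    if a == 1:
        reward = 1.0 if s == n_states - 1 else 0.0
        return min(s + 1, n_states - 1), reward
    return max(s - 1, 0), 0.0


def sample_q(data, horizon, n_states, rng, prior_var=1.0, noise_var=0.1):
    """One RLSVI backward pass with one-hot (tabular) features.

    data[h] holds (s, a, r, s_next) transitions observed at timestep h.
    """
    q = [[[0.0, 0.0] for _ in range(n_states)] for _ in range(horizon + 1)]  # q[horizon] = 0
    for h in reversed(range(horizon)):
        sums = defaultdict(float)
        counts = defaultdict(int)
        for s, a, r, s_next in data[h]:
            # The regression target uses the *sampled* value function at the next step.
            sums[(s, a)] += r + max(q[h + 1][s_next])
            counts[(s, a)] += 1
        for s in range(n_states):
            for a in (0, 1):
                # Gaussian posterior for one coefficient: prior N(0, prior_var), noise variance noise_var.
                precision = 1.0 / prior_var + counts[(s, a)] / noise_var
                mean = (sums[(s, a)] / noise_var) / precision
                q[h][s][a] = rng.gauss(mean, (1.0 / precision) ** 0.5)
    return q


def run_rlsvi(episodes, n_states=5, horizon=5, seed=0):
    rng = random.Random(seed)
    data = [[] for _ in range(horizon)]
    returns = []
    for _ in range(episodes):
        q = sample_q(data, horizon, n_states, rng)  # fresh randomized value function per episode
        s, total = 0, 0.0
        for h in range(horizon):
            a = 0 if q[h][s][0] > q[h][s][1] else 1  # greedy w.r.t. the sample
            s_next, r = chain_step(s, a, n_states)
            data[h].append((s, a, r, s_next))
            total += r
            s = s_next
        returns.append(total)
    return returns


returns = run_rlsvi(200)
print(sum(returns[:100]), sum(returns[100:]))
```

All exploration comes from the randomness of each episode's value-function sample: pairs with little data keep wide posteriors, and the backward pass propagates that uncertainty through `max(q[h + 1][s_next])` without any model-based planning step.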

Model-based Reinforcement Learning and the Eluder Dimension

The first general analysis of model-based RL in terms of the dimensionality, rather than the cardinality, of the system. Several new state-of-the-art results, including for linear systems.

Ian Osband, Benjamin Van Roy

NeurIPS 2014

[Paper] [Poster]

Near-optimal Reinforcement Learning in Factored MDPs

If the environment is a structured graph (aka a factored MDP), then you can exploit that structure to learn quickly. You can adapt UCB-style approaches for this; posterior sampling gets it for free.

Ian Osband, Benjamin Van Roy

NeurIPS 2014 (spotlight), INFORMS 2014

[Paper] [Poster] [Spotlight]

(More) Efficient Reinforcement Learning via Posterior Sampling

You don't need to use loose UCB-style algorithms to get regret bounds for reinforcement learning. Posterior sampling is more efficient in terms of both computation and data, and shares similar guarantees.

Ian Osband, Dan Russo, Benjamin Van Roy

NeurIPS 2013, RLDM 2013

[Paper] [Poster]
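
A minimal sketch of tabular posterior sampling for reinforcement learning (PSRL) with finite-horizon episodes, assuming Dirichlet(1, ..., 1) priors on transitions, Beta(1, 1) priors on Bernoulli rewards and a toy chain environment: at the start of each episode, sample one MDP from the posterior, solve it by value iteration, and follow that policy for the whole episode. Function names and the environment are hypothetical.

```python
import random


def sample_dirichlet(counts, rng):
    """Draw a probability vector from Dirichlet(counts) by normalizing Gamma draws."""
    draws = [rng.gammavariate(c, 1.0) for c in counts]
    total = sum(draws)
    return [d / total for d in draws]


def plan(P, R, horizon):
    """Finite-horizon value iteration on a sampled MDP; returns a greedy policy pi[h][s]."""
    n_states, n_actions = len(R), len(R[0])
    v = [0.0] * n_states
    pi = [[0] * n_states for _ in range(horizon)]
    for h in reversed(range(horizon)):
        new_v = [0.0] * n_states
        for s in range(n_states):
            qs = [R[s][a] + sum(p * v[t] for t, p in enumerate(P[s][a])) for a in range(n_actions)]
            pi[h][s] = max(range(n_actions), key=lambda a: qs[a])
            new_v[s] = qs[pi[h][s]]
        v = new_v
    return pi


def run_psrl(env_step, n_states, n_actions, horizon, episodes, seed=0):
    rng = random.Random(seed)
    # Posterior parameters: Dirichlet counts per (s, a), Beta(succ, fail) per (s, a).
    trans = [[[1.0] * n_states for _ in range(n_actions)] for _ in range(n_states)]
    succ = [[1.0] * n_actions for _ in range(n_states)]
    fail = [[1.0] * n_actions for _ in range(n_states)]
    returns = []
    for _ in range(episodes):
        # 1. Sample one MDP from the posterior.
        P = [[sample_dirichlet(trans[s][a], rng) for a in range(n_actions)] for s in range(n_states)]
        R = [[rng.betavariate(succ[s][a], fail[s][a]) for a in range(n_actions)] for s in range(n_states)]
        # 2. Solve the sampled MDP and follow its optimal policy for the whole episode.
        pi = plan(P, R, horizon)
        s, total = 0, 0.0
        for h in range(horizon):
            a = pi[h][s]
            s_next, r = env_step(s, a, rng)
            # 3. Update the posterior with the observed transition and 0/1 reward.
            trans[s][a][s_next] += 1.0
            succ[s][a] += r
            fail[s][a] += 1.0 - r
            total += r
            s = s_next
        returns.append(total)
    return returns


def chain_env(s, a, rng, n_states=5):
    # Toy deterministic chain (rng unused here, kept for stochastic environments).
    if a == 1:
        return min(s + 1, n_states - 1), (1.0 if s == n_states - 1 else 0.0)
    return max(s - 1, 0), 0.0


returns = run_psrl(chain_env, n_states=5, n_actions=2, horizon=5, episodes=100)
print(sum(returns[:50]), sum(returns[50:]))
```

Committing to a single sampled MDP for a whole episode is the important design choice here; resampling at every step would make the agent's plan inconsistent from one step to the next.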

Deep Learning for Time Series Modeling

We apply deep learning techniques to energy load forecasting across 20 geographic regions.

We found that recurrent network architectures were particularly suited to this task.

Class project for CS 229 in my first quarter at Stanford.

Ian Osband, Enso Busseti, Scott Wong

Class project 2012

[Paper]